By Lena Anthony

First-year nursing students, U.S. Army soldiers and a middle school science class might seem very different at first glance. But when you consider the recent work of Cornelius Vanderbilt Professor of Engineering Gautam Biswas, the similarities become clear.

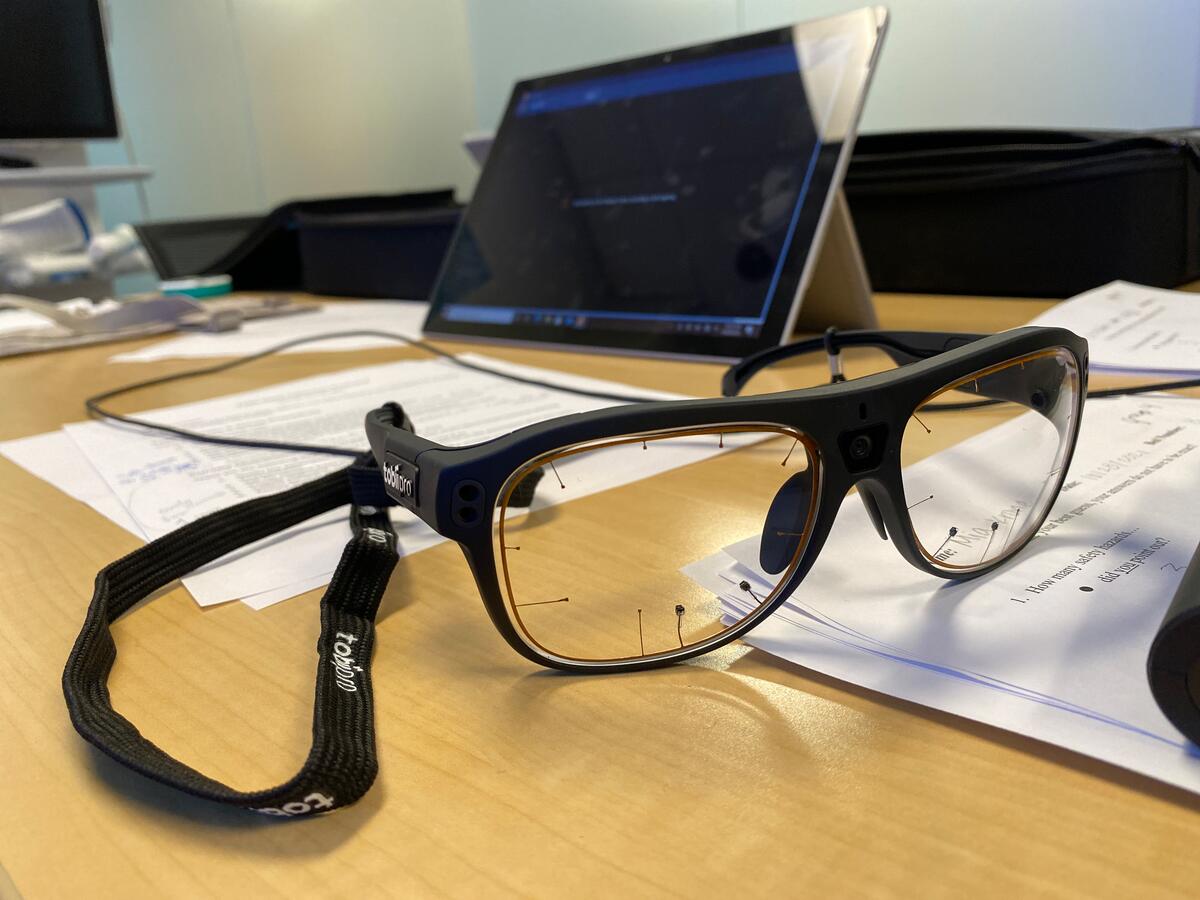

Each group has been a test case for Biswas’ research, which collects multimodal data, such as clicks on a computer, hand gestures, eye gaze position and speech, and then applies artificial intelligence and machine learning algorithms to analyze how students learn and how trainees perform.

The goal is to provide constructive feedback to the learners and instructors so they can do their work more effectively, as well as to give useful insights to curriculum and simulation designers so they can improve learning tools.

“What students are looking at, where they’re standing, how they move around and interact with the other people in the room, all of these are important details that can be captured, analyzed and used to improve the learning process,” Biswas says. “Instructors can’t observe all of this detail on their own. Or if they do capture it in the moment, it is difficult for them to remember all the details and be able to point back to them later. The idea of the technology, then, is not to replace the instructor, but to empower them and help them provide more contextualized feedback to their students.”

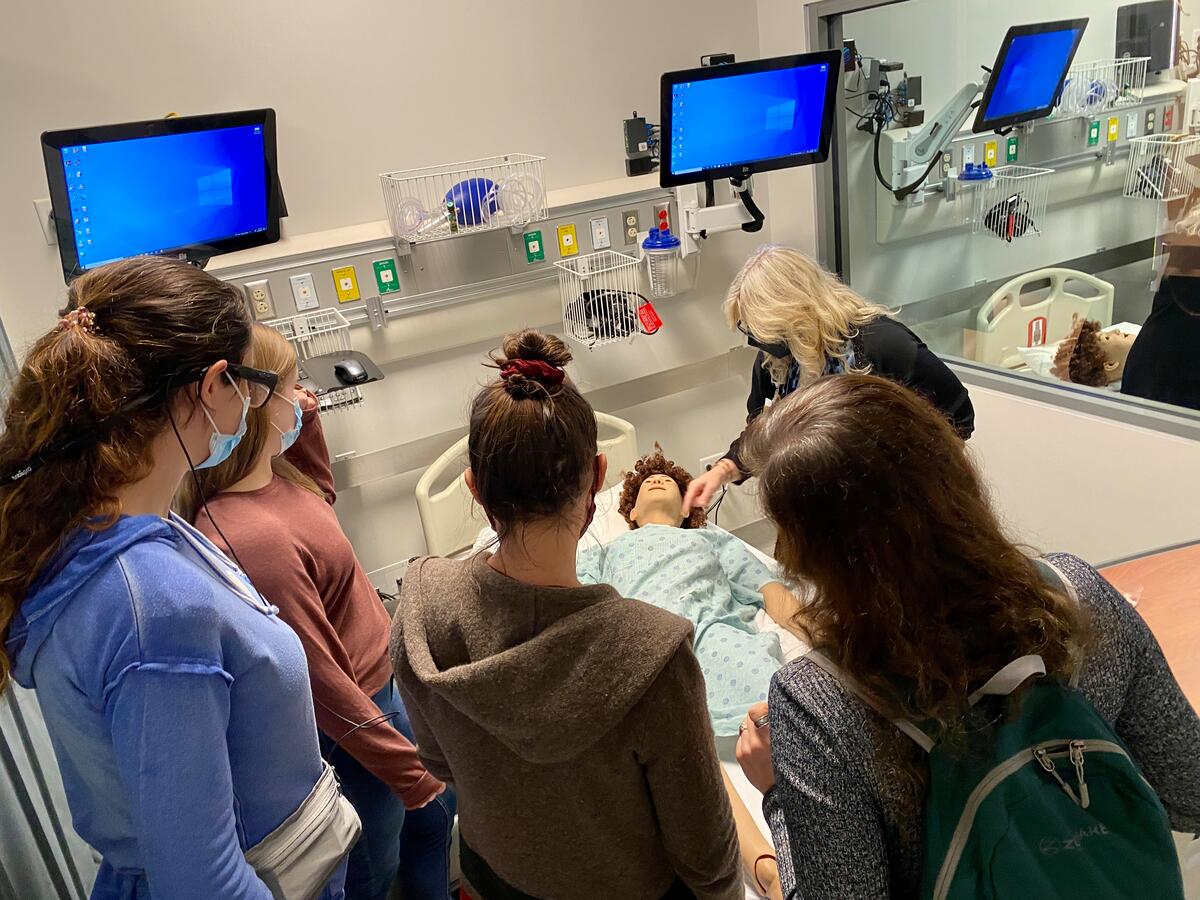

For a study that appeared in the July 2022 edition of Frontiers in Artificial Intelligence, first-year nursing students in the Vanderbilt School of Nursing simulation lab worked in teams to diagnose and treat an interactive manikin controlled by a simulation program and their instructor. The lab and the students were outfitted with a variety of sensors, including eye-tracking glasses worn by the students, which helped the researchers detect where the students moved, what they touched and where they looked as they diagnosed and treated the manikin’s condition. Meanwhile, machine learning algorithms operated in the background to detect relevant objects around the room and help piece together a timeline of who did what and when. A follow-up paper that will focus more specifically on the eye-tracking data and analytics has been accepted for presentation at the 2023 International Learning Analytics and Knowledge Conference.

This study and a similar one Biswas did with U.S. Army soldiers conducting a room-clearing exercise were funded by an Army Research Laboratory Award and a National Science Foundation Cyberlearning Award.

Mary Ann Jessee, assistant dean for academics in the School of Nursing, says this type of interdisciplinary collaboration has the potential to transform both the teaching practices of instructors and the professional capacity of graduates. For health care providers, the end result is more “safe, quality nursing care,” Jessee says.

Cracking the code of education

Biswas’ work in K-12 education falls under the $20 million National Science Foundation AI Institute for Engaged Learning, which was formed in 2021 and includes engineering and education faculty from Vanderbilt, as well as contributors from North Carolina State University, Indiana University, the University of North Carolina at Chapel Hill, and the educational nonprofit Digital Promise.

Peer-reviewed journals, multiyear grants from the NSF—Biswas sees these as progress in his efforts to apply AI to education. But there’s still a long way to go before AI can be seamlessly introduced into classrooms to benefit students and their teachers.

“We have a variety of sensors that can collect this data. We have machine learning experts who can help process this data and give us the results we’re looking for,” Biswas says. But, he observes, everything in between—such as processing the data online and using methods that are grounded in education theories to provide personalized feedback to students and help teachers analyze the situation in a classroom in a way that benefits students—is a technical, computational and, in some cases, intellectual challenge.

Biswas, who is also a professor of computer science and computer engineering, has been interested in AI since the beginning of his career, around 1984.

“When I got my Ph.D., artificial intelligence was well known, but it wasn’t the hot topic it is today,” he recalls. “My training was in pattern recognition and image processing, but to me, AI always had the potential to solve much more interesting problems. Luckily, it wasn’t difficult to make the transition, because the basic techniques are the same.”

Since joining Vanderbilt’s faculty in 1988, Biswas has focused on AI in a variety of applications. He is well known for his role in the development of autonomous fault detection and fault adaptive control mechanisms for complex machines such as spacecraft, smart buildings, aircraft and now unmanned aerial vehicles.

Machine diagnostics and education may seem like different research topics, but anomaly detection in a machine is not that different from parsing the actions of a learner. “In each case, we’re applying computing methods to an engineering problem,” Biswas says.

A few decades ago, researchers at Peabody College of education and human development at Vanderbilt had an engineering problem on their hands: reams of paper-based student learning data that were too tedious to analyze. So John Bransford, the late Centennial Professor of Psychology and Education and director of the Learning Technology Center at Peabody, and Dan Schwartz, who co-created the “How People Learn” framework with Bransford, tapped Biswas to build a computer-based system to collect the necessary data and analyze it quickly using AI methods.

Biswas says this project helped open his eyes to the possibility of using the engineering models he builds to help teach science and other STEM topics to kids.

“Bransford and Schwartz were all about trying to teach students science using problems that are realistic,” he says. “The idea was to get kids excited to solve those problems and then introduce them to the scientific concepts behind them.”

During the 2022–23 school year, students at an academic magnet middle school in Nashville are piloting a computational program to help them learn about scientific processes, like flooding and water runoff after a heavy rainfall, as well as coding concepts that students use to build computational science models and solve engineering problems.

Biswas designed the program with collaborators from the University of Virginia and the nonprofit education research institute SRI International. Vanderbilt Engineering students developed AI and analytics methods to gain insight into students’ activities in the computing environment, which in turn helps teachers understand how students work to solve scientific problems and where they have difficulties. A future iteration of the program will feature an AI agent that can interact with the students as they work through the problems.

This, in fact, is the goal of the AI Institute for Engaged Learning—to create embodied AI agents that serve as participants and can play multiple roles in the learning exercise, such as mentor, peer or teachable agent.

“The agent might notice that one student in a group is doing all of the work, for example,” Biswas says. “Or if students seem stuck on a concept, the agent might suggest trying a new method. Because we’re capturing all this multimodal data—via cameras, microphones, eye tracking devices—our algorithms can analyze students’ learning processes and behaviors and respond accordingly via the agent.”

First, Biswas and his colleagues need data—lots of it—to train these agents. Luckily, just five minutes of interactions throughout a classroom can produce megabytes of data. Imagine the data from a three-week-long coding curriculum. “Schools don’t have the computing infrastructure needed to support our data needs,” he says. “This infrastructure exists, but not in education.”

To help address this challenge, Biswas and his colleagues at the AI Institute have applied for NSF funding to build a demonstration classroom at Vanderbilt. “We want to build a fully instrumented demonstration classroom, which will be a controlled environment where we can build the infrastructure, test it out and run our algorithms, and make sure we can do online analysis, before we try to migrate it to the noisy real world we will encounter in a classroom.”

Applying AI in the real world

But therein lies an interesting question. Can data collected in one classroom at one type of school (like an academic magnet school or a demonstration classroom at a university) translate to a different classroom setting that might have different demographics? Should the AI agent behave the same or differently?

“How students gesture, their poses and their speech might be quite different depending on the demographics of the classroom,” Biswas says. “Our challenge is training our algorithms to recognize those differences.”

Other ethical questions revolve around consent, privacy and security, but Biswas is not alone in working through these and other challenges. Part of the AI Institute, his multimodal analytics group includes Maithilee Kunda, assistant professor of computer science and computer engineering, research scientist Nicole Hutchins, PhD’22, and four graduate students. His colleagues also come from other parts of the university—the College of Arts and Science, Peabody College and the Wond’ry, Vanderbilt’s Innovation Center.

The AI Institute has made questions of ethics, diversity and social impact foundational to its approach, tapping Ole Molvig, assistant professor of history, as head of AI ethics across the institute.

“This type of cross-disciplinary, even cross-school, collaboration is precisely what makes working at Vanderbilt so exciting,” Molvig says. “As a humanist working in technology and innovation, the opportunity to work with colleagues from computer science and education on thorny problems that really matter has been incredibly rewarding.”