Multi-institute NSF-funded project to create AI tools to radically improve STEM learning

Vanderbilt University engineering and education faculty are part of a new $20 million research institute funded by the National Science Foundation that aims to create artificial intelligence tools to advance human learning and education.

The NSF AI Institute for Engaged Learning is one of 11 new AI institutes announced July 29 as part of a major initiative to advance understanding of AI technologies and how they can drive innovation to address real-world challenges. The agency has invested $220 million across 18 such institutes to date.

In this case, researchers will develop narrative-centered AI platforms and characters, or agents, to interact with and support a wide variety of learners. The institute will create a sophisticated framework that analyzes data from interactions to evaluate what works and what needs refinement to make the tools truly interactive and adaptive to the learning needs of individuals and of collaborating groups.

“By introducing these technologies, we make learning more ubiquitous, whether it is at a school, coffee shop, museum or at home,” said Cornelius Vanderbilt Professor of Engineering Gautam Biswas, lead researcher of the Vanderbilt team. “It also recognizes that learning happens more as a social process and that students learn better when they are immersed in authentic problem-solving scenarios.”

North Carolina State University is the lead institution, and other partners include Indiana University, the University of North Carolina at Chapel Hill and Digital Promise, an educational nonprofit organization.

VU computer science, education, ethics experts on board

The Vanderbilt share of the five-year project is $4.15 million. The team comprises Biswas, professor of computer science and computer engineering; Maithilee Kunda, assistant professor of computer science and computer engineering; Corey Brady, assistant professor of learning sciences, and Noel Enyedy, professor of science education, both from Peabody College of education and human development; and Ole Molvig, assistant professor of history and communications of science and technology and founder of the emergent technology lab at The Wond’ry. Molvig is the AI ethics and privacy expert for the $20 million project, which was awarded under the NSF research thrust of Augmented Learning.

The institute will focus on three complementary areas:

- Creating AI platforms that generate interactive, story-based problem scenarios that foster communication, teamwork and creativity as part of the learning process.

- Developing AI characters capable of communicating with students through their speech, facial expression, gesture, gaze and posture. These characters, or “agents,” will be designed using state-of-the-art advances in AI research to foster interactions that effectively engage students in the learning process.

- Building a sophisticated analytics framework that analyzes data from students to make the tools truly interactive. The system will be able to customize educational scenarios and processes to help students learn, based on information the system collects from the conversations, gaze, facial expressions, gestures and postures of students as they interact with each other, with teachers and with the technology itself.

Such a “classroom of the future” might look like this: One group of students is interacting with a Smart Board, while other students work individually or in groups using AI-powered tools, and a teacher presents new material to a subset of the class. Multiple cameras capture the simultaneous interactions with the teacher, among students in groups and between individual students and their technological tools. As the class works through the scenario, the AI-powered analysis identifies adaptations to the individual and collective activities that optimize the progress that learners make in each working unit and as a whole.

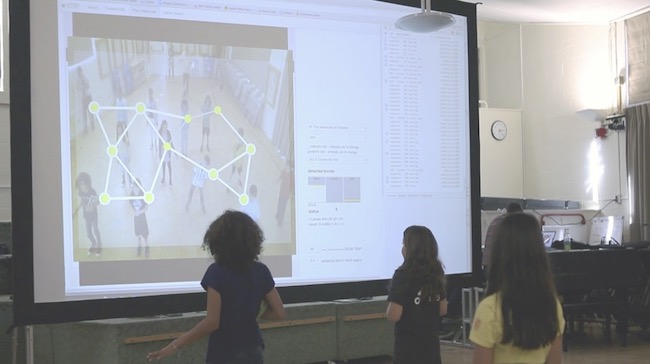

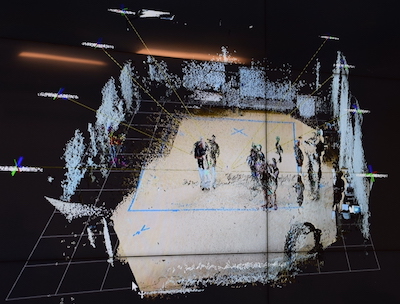

Enyedy’s lab, an instrumented classroom in the Vanderbilt Sony Building, already features components of such a system. In one exercise, students learn about honeybee behavior and communication by acting like bees, collecting nectar and taking it to their hive. Another exercise introduces students in kindergarten through second grade to the states of matter as they move about to create patterns that produce a solid, liquid or gas. They see their movement, captured by a camera array and rendered anonymously, on a large screen at the front of the classroom. Ongoing research, in collaboration with Brady, extends this system into a modeling environment in which groups of young learners create, test and revise simulations of their own through embodied interactions.

“Embodied modeling in a mixed reality simulation, where children learn about bees and pollination by becoming bees, is really just a form of technology-enhanced pretend play, but it’s a type of play that can easily be transformed into inquiry-based teaching and learning,” Enyedy said. “With the new AI tools that our institute will develop, mixed-reality environments like this one will be taken to the next level—the environment itself will be able to dynamically adapt to what a particular group of students is trying to do or is struggling with.”

Building on state of the art, integrating AI as new kind of participant

The AI institute's work goes further: it captures not only movement but also discussion among the students and interactions with the teacher, adds the AI character, or computational intelligence, and uses advanced analytics so the system learns as it guides.

“The state of the art now is that these agents exist, but they are designed to operate in specific problem scenarios through limited interaction modes,” Biswas said. “Our goal is to build comprehensive, adaptive environments that can adjust to teacher and student needs, can seamlessly support individuals as well as groups of students in classrooms and in informal learning environments, such as museums, and can interact with students along multiple modalities (speech, gestures, gaze and movements). We have the ambitious goal of developing technologies and analytics that will become an essential component of classrooms of the future.”

As envisioned, the computational intelligence serves as a nonhuman observer that can prompt, suggest and offer guidance to a teacher, an individual student or a group. For example, as it learns, it may recognize that one student is dominating the exercise or that another student does not appear to be participating—and nudge accordingly, Brady said.
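As a rough illustration of the kind of signal described above, the sketch below flags a student who dominates a group discussion and one who stays silent, using each student's share of speaking time. It is only a minimal sketch under stated assumptions: the function name `participation_nudges`, the input format and the `high` and `low` thresholds are hypothetical and are not drawn from the institute's tools.

```python
from collections import defaultdict


def participation_nudges(turns, students, high=0.5, low=0.1):
    """Flag students whose share of speaking time looks unbalanced.

    turns:    iterable of (student, seconds_spoken) pairs
    students: everyone in the group, including those who never spoke
    high/low: illustrative thresholds (assumptions, not project values)
    """
    seconds = defaultdict(float)
    for student, duration in turns:
        seconds[student] += duration

    total = sum(seconds.values())
    nudges = {}
    if total == 0:
        # Nobody has spoken yet, so there is nothing to compare.
        return nudges

    for student in students:
        share = seconds[student] / total
        if share > high:
            nudges[student] = "dominating"
        elif share < low:
            nudges[student] = "quiet"
    return nudges


# Hypothetical group: Ana speaks for 150 s, Ben for 50 s, Cy not at all.
group = ["Ana", "Ben", "Cy"]
turns = [("Ana", 90), ("Ben", 40), ("Ana", 60), ("Ben", 10)]
print(participation_nudges(turns, group))
# prints {'Ana': 'dominating', 'Cy': 'quiet'}
```

A real system would draw on far richer signals, such as gaze, gesture and posture, and would leave the decision about whether and how to nudge to the teacher or the agent design.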

Still, the AI will be integrated as a new kind of participant, not as an all-knowing, superior observer that dictates the narrative or whose observations are taken as valid. This approach disrupts some conventional thinking about artificial intelligence, Brady said, in which the computer is intended to emulate and improve upon human forms of perception and expertise. “We see opportunities for innovation when we identify new ways to partner with computational agents. The value of collaborating with a computer is enhanced when the computer offers a valid but different perspective. In this way, computers can add to the diversity of collaborating groups, building on research showing that such diversity is a critical asset to groups’ learning.”

By definition, this is a Big Data undertaking. Just five minutes of interactions throughout a classroom produces megabytes of data. Now imagine the data generated in a yearlong STEM curriculum for a single grade level.

“It is going to be challenging, but the impacts can be huge,” Biswas said.