Evaluating the severity of a burn injury – and whether it requires transfer to a Burn ICU – has long been more art than science. About 79 percent of Total Burn Surface Area calculations are incorrect, which sends more patients than necessary to specialized facilities, strains resources and compromises resuscitation and patient care.

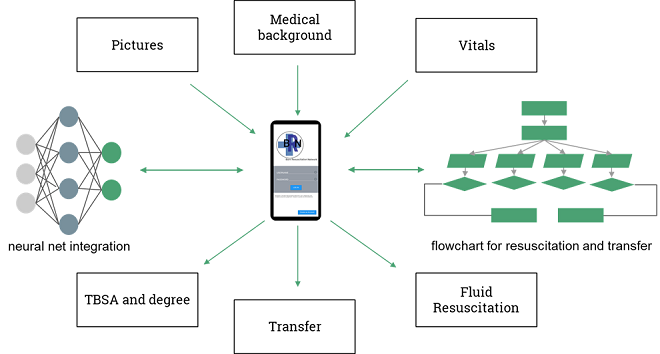

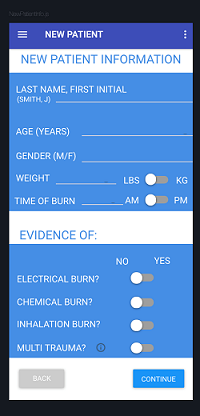

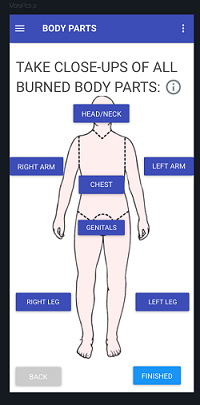

A senior engineering design team used artificial intelligence to improve the science. They wanted to develop more accurate and consistent estimates of patient burn injury severity and fluid resuscitation needs. The result is a prototype app in which a physician enters the patient’s vitals and history and photographs the burned areas.

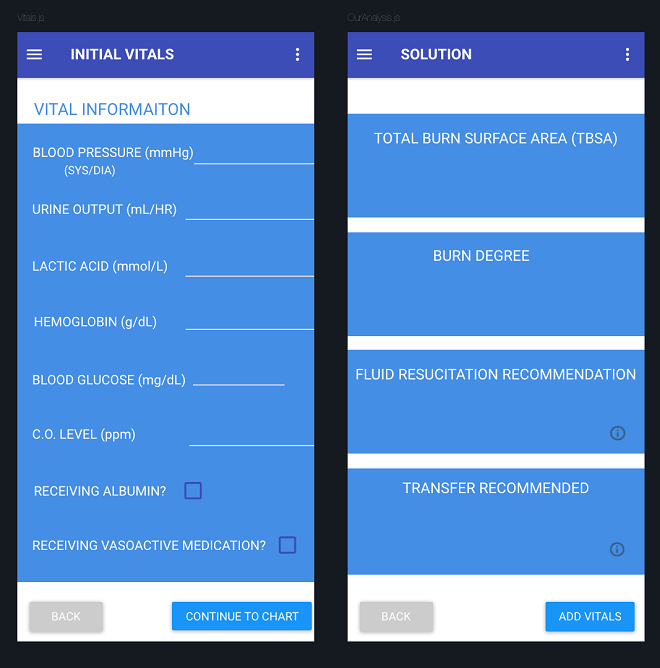

A neural network determines the burn surface area and degree from the photos, and the algorithm combines the burn area with the patient’s vitals to calculate the fluid volume needed for resuscitation. The app also compares the patient data against the American Burn Association transfer criteria to determine whether transfer to a higher-level burn center is necessary.
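The article does not say which resuscitation formula the app uses. As an illustration only, the sketch below uses the widely taught Parkland formula: 4 mL of fluid per kilogram of body weight per percent of body surface area burned over 24 hours, with half given in the first 8 hours. The function name and its inputs are hypothetical and are not taken from the team's code.

```python
def parkland_fluid_ml(weight_kg: float, burn_percent: float) -> dict:
    """Estimate 24-hour fluid volume (mL) with the Parkland formula.

    Assumption: the article does not name the app's formula; Parkland is
    used here purely as an example of combining burn area with a vital.
    """
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    if not 0 <= burn_percent <= 100:
        raise ValueError("burn_percent must be between 0 and 100")

    total_ml = 4.0 * weight_kg * burn_percent
    return {
        "total_24h_ml": total_ml,
        "first_8h_ml": total_ml / 2,   # first half over the first 8 hours
        "next_16h_ml": total_ml / 2,   # second half over the next 16 hours
    }


# Hypothetical patient: 70 kg with burns over 20 percent of body surface.
plan = parkland_fluid_ml(weight_kg=70, burn_percent=20)
print(plan["total_24h_ml"], plan["first_8h_ml"])
```

Because the volume scales linearly with burn area, an overestimate of the burned percentage translates directly into a proportional excess of fluid, which is why the accuracy of the area estimate matters.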

Eric Yeats, a computer engineering major, joined biomedical engineering majors Jacob Ayers, Hannah Kang, Dominique Szymkiewicz, Nora Ward and Thomas Yates, all BE ’19 graduates. Yeats designed the algorithm and trained the neural network with 275,000 images – small 50-by-50 pixel sections of 200 larger photos of burns of varied severity over varied body areas.
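To give a sense of how 200 photos can yield 275,000 training images, here is a minimal sketch that tiles a photo into 50-by-50 pixel patches. It assumes non-overlapping tiles and a made-up photo size; the article does not say how the team cut its patches or how large the photos were, and `patch_boxes` is a hypothetical helper.

```python
def patch_boxes(height: int, width: int, size: int = 50):
    """Yield (top, left, bottom, right) boxes for non-overlapping tiles.

    Edge pixels that do not fill a whole tile are skipped. Non-overlapping
    tiling is an assumption; the article only gives the patch size.
    """
    for top in range(0, height - size + 1, size):
        for left in range(0, width - size + 1, size):
            yield (top, left, top + size, left + size)


# Hypothetical photo of 1250 x 2750 pixels: 25 rows x 55 columns of tiles.
boxes = list(patch_boxes(1250, 2750))
print(len(boxes))        # patches from one photo
print(len(boxes) * 200)  # patches from 200 photos of that size
```

At this illustrative size, each photo contributes 1,375 patches, which matches the article's average of 275,000 patches across 200 photos.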

The app, which goes far beyond anything now available to clinicians, runs photo inputs through a convolutional neural network that differentiates burned skin, healthy skin and background. It works across skin colors and ages, and even accounts for tattoos.
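Once each region of a photo has been labeled as one of the three classes, a burned fraction can be computed by ignoring background. The sketch below assumes one label per patch and reports the share of visible skin that is burned; the article does not describe how the app converts this into a whole-body percentage, and the label strings and function name are hypothetical.

```python
from collections import Counter

# The three classes named in the article; the string labels are assumptions.
LABELS = ("burn", "healthy", "background")


def burned_skin_fraction(patch_labels) -> float:
    """Return burned patches divided by all skin patches in one photo."""
    counts = Counter(patch_labels)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")

    skin = counts["burn"] + counts["healthy"]
    if skin == 0:
        return 0.0  # no skin visible in the photo
    return counts["burn"] / skin


# Hypothetical classifier output for a 20-patch photo.
labels = ["burn"] * 3 + ["healthy"] * 9 + ["background"] * 8
print(burned_skin_fraction(labels))  # 0.25
```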

The impressive effort won the BME design award, the Thomas G. Arnold Prize. The project, on which Dr. Avinash Kumar of VUMC’s Division of Anesthesiology and Critical Care Medicine continues to serve as adviser, is seeking grants, and Yates is working on it over the summer.

Next steps, in consultation with VUMC Burn ICU staff members, involve testing the accuracy of both the neural network’s burn area calculation and the overall application.

Estimating Total Burn Surface Area is currently prone to human error and subjectivity and is complicated by differences in body type, and a preliminary misdiagnosis has significant consequences. Over-resuscitation of a patient, including delivering too much fluid, can cause hypertension, kidney damage and other complications. Underestimating burn area can mean a burn patient does not receive enough fluid.

An estimated 40,000 people are hospitalized with burn trauma in U.S. hospitals each year, 30,000 of them at a dwindling number of specialized burn centers. The U.S. has roughly 123 burn ICUs, down from 180 in 1976, according to the American Burn Association.